- Nvidia is the world's most popular maker of artificial intelligence chips.

- It has released its latest chip, which is claimed to be nine times faster than its predecessor.

- Only AMD has a chance to challenge Nvidia's AI chip dominance.

If there's one company currently (or even in the foreseeable future) dominating the tech industry, it's Nvidia. The tech giant's products, especially AI chips, are the industry's most valuable resource. So much so that NVIDIA's market capitalization has surpassed $1 trillion, making it the most valuable chipmaker in the world, signaling a clear boom in demand for AI.

The company is the biggest beneficiary of the rise of ChatGPT and other generative artificial intelligence (AI) applications, almost all of which are powered by its very powerful graphics processors. Prior to this, Nvidia's chips were also widely used to power traditional AI systems, and during the cryptocurrency boom, demand for the chips increased as systems in the industry also relied on their processing power.

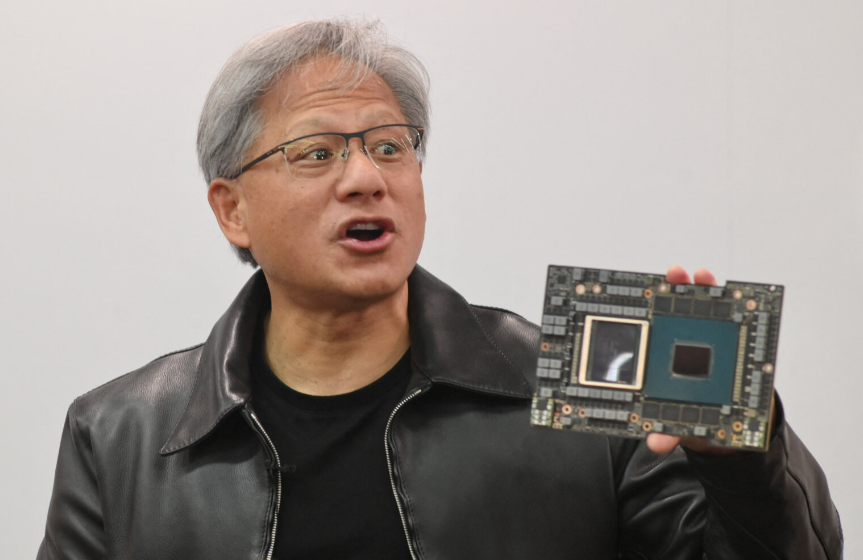

Nvidia has all but monopolized the AI market with its chips, and that dominance did not begin in the post-ChatGPT era. Nvidia founder and CEO Jen-Hsun Huang has long been so certain about AI that many of his predictions sounded like lofty rhetoric or marketing gimmicks.

How NVIDIA Got Ahead of the Curve to Reach the AI Chip Inflection Point

NVIDIA, led by Jen-Hsun Huang, has always been at the leading edge of new developments: first gaming, then machine learning, then cryptocurrency mining, data centers, and now artificial intelligence. Over the past decade, the chip giant has developed a unique portfolio of hardware and software products designed to democratize AI and to let the company benefit from the adoption of AI workloads.

But the real turning point came in 2017, when Nvidia began adapting GPUs to handle specific AI calculations. That same year, Nvidia, which typically sells chips or boards for other companies' systems, also began selling complete computers to perform AI tasks more efficiently.

Some of its systems are now the size of supercomputers, assembled and run using proprietary networking technology and thousands of GPUs. Such hardware may run for weeks to train the latest AI models. Competing with a company that sells computers, software, cloud services, trained AI models and processors is very difficult for some rivals.

By 2017, tech giants like Google, Microsoft, Facebook and Amazon had bought more Nvidia chips for their data centers. Organizations like Massachusetts General Hospital used Nvidia chips to spot anomalies in medical images like CT scans.

By then, Tesla had already announced that it would install Nvidia GPUs in all of its cars to enable autonomous driving. Nvidia chips also provided a strong base of capabilities for virtual reality headsets, like the ones that Facebook and HTC were bringing to market. Nvidia has a reputation for consistently delivering faster chips every few years.

Looking back, it's safe to say that for more than a decade, Nvidia has established a nearly impenetrable lead in producing chips that perform complex AI tasks like image, facial, and speech recognition, as well as generating text for chatbots like OpenAI's ChatGPT.

How did it get there? NVIDIA achieved its dominance by recognizing AI trends early, customizing its chips for those tasks, and then developing the critical software that supports AI development. As the New York Times (NYT) notes, NVIDIA has gradually transformed itself into a one-stop shop for AI development.

While Google, Amazon, Meta, IBM and others also produce AI chips, Nvidia now accounts for more than 70 percent of global AI chip sales, according to research firm Omdia. It's even more prominent in training generative AI models. Microsoft alone has spent hundreds of millions of dollars on tens of thousands of Nvidia A100 chips to help build ChatGPT.

Don Clark of the New York Times wrote, "By May, the company's position as the clearest winner of the artificial intelligence revolution had become clear, with quarterly revenues projected to grow by 64 percent, far outpacing Wall Street's expectations."

Generative AI's 'iPhone moment': Nvidia's H100 AI chip

"We're in the iPhone moment of AI," Jen-Hsun Huang said at the GTC conference in March. He was also quick to point out Nvidia's role in the beginning of this AI wave: he brought a DGX AI supercomputer to OpenAI in 2016, and that hardware was eventually used to build ChatGPT.

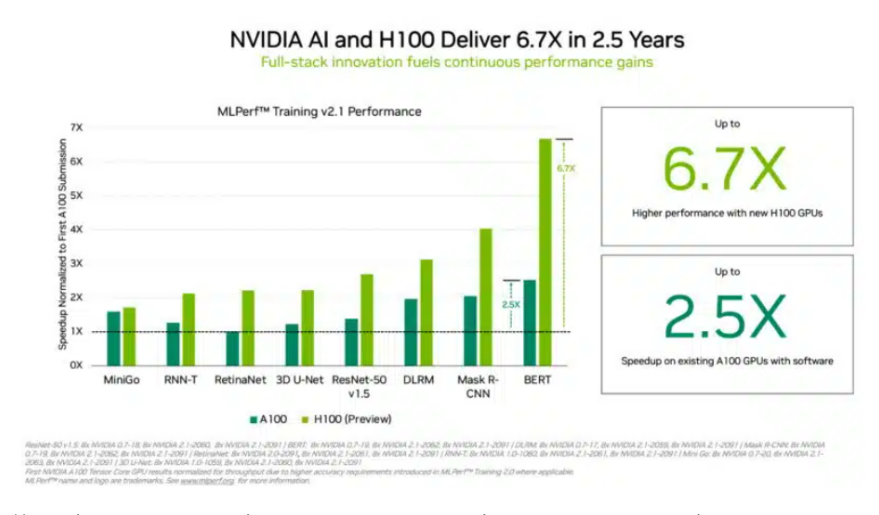

At GTC earlier this year, Nvidia unveiled the H100, the successor to its A100 GPU, which underpins modern large language model efforts. Nvidia claims that the H100 delivers AI training that is up to nine times faster than the A100 and inference that is up to 30 times faster.

While Nvidia did not discuss pricing or chip allocation policies, industry executives and analysts have said that each H100 costs between $15,000 and more than $40,000, depending on packaging and other factors, and is roughly two to three times the cost of the previous generation A100 chip.

The H100, however, continues to face severe supply shortages amid soaring demand, even as shortages ease in most other chip categories. Reuters reports that analysts believe Nvidia will only be able to meet half of the demand, with its H100 chips selling for twice the original $20,000 price tag. According to Reuters, this trend could continue for several quarters.

There's no denying that the surge in demand is coming from China, where companies are stockpiling chips due to U.S. chip export restrictions. The Financial Times reports that leading Chinese Internet companies have ordered $5 billion worth of chips from NVIDIA. The gap between supply and demand will inevitably lead some buyers to turn to NVIDIA's rival AMD, which is looking to challenge the company's most powerful AI workload offering with its MI300X chip.

What's next?

NVIDIA shares have tripled this year and the company's market valuation has increased by more than $700 billion, making it the first chip company to be worth a trillion dollars. Investors now expect the chip designer to forecast higher-than-expected quarterly revenue when it reports results on Aug. 23.

NVIDIA's second-quarter earnings will be the biggest test of the artificial intelligence hype cycle. "What NVIDIA reports in its upcoming earnings report will be a barometer for the entire AI hype," Forrester analyst Glenn O'Donnell told Yahoo. "I expect the results to be excellent because demand is so high, which means NVIDIA can make higher profits than other companies."

On the supply side, NVIDIA won't be the only supplier, but what puts the company in a better position than its rivals is its market lead and its patent-protected technology, which it developed early on. Some market experts believe that AMD is the "only viable alternative" to Nvidia's AI chips, at least for the time being.